HowTo mirror a phpBB3 forum via wget

I ran a phpBB3 forum for several years, and activity has been slowing down step by step. So I decided to get rid of that old, security-impaired (read: seldom updated) phpBB3 forum and mirror it as plain HTML files. After Googling a bit I found an entry at superuser.com stating that I could use wget, which I thought was "brilliant"!

Using

wget -k -m -E -p -np -R 'memberlist.php*,faq.php*,viewtopic.php*p=*,posting.php*,search.php*,ucp.php*,viewonline.php*,*sid*,*view=print*,*start=0*' -o log.txt 'http://www.example.com/forum/'

I tried to fetch the whole forum while excluding many of the useless pages (reporting posts, sending private messages, ...).

Soon I noticed that I got nearly *no* pages - all were deleted because of the reject list. When I checked the logfile, it turned out that every URL carried a session ID (sid), so they were all rejected by the *sid* pattern in the -R parameter above.
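A quick way to see how badly the log is affected is to count the lines that mention a session ID. This is only a small sketch: `count_sid_urls` is a helper name I made up, and it assumes the log was written to log.txt as in the command above.

```shell
# Count the lines of a wget log that contain a session ID parameter.
# (count_sid_urls is a hypothetical helper, not part of wget.)
count_sid_urls() {
    # grep -c prints the number of matching lines. It exits non-zero when
    # that number is 0, so "|| true" keeps the helper usable under "set -e".
    grep -c 'sid=' "$1" || true
}

# Only look at the log if it exists in the current directory.
if [ -f log.txt ]; then
    count_sid_urls log.txt
fi
```

If the number is close to the total number of fetched URLs, the sid entry in the reject list is throwing away almost everything.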

Also, it did not even try to fetch many pages, as they were for registered users only.

It took me quite some time and some more Googling to find out that I needed to have my wget registered as a bot, as bots do not receive a session ID. Using the cookie mechanism in wget did not work: I tried, but failed miserably, because the download took a long time and I was soon handed a new session ID.

So the next step was to protect the forum via .htaccess against unwanted visitors. After that I added a new user agent string to my phpBB3.
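For the .htaccess part, something along these lines should do on an Apache server. Treat it as a sketch under assumptions: `write_htaccess` is a helper name I made up, both paths are placeholders, and it appends to the file rather than overwriting it, because the forum directory may already contain an .htaccess.

```shell
# Append HTTP basic authentication directives to the forum's .htaccess.
# (write_htaccess is a hypothetical helper; both arguments are placeholders.)
write_htaccess() {
    forum_dir=$1      # directory that contains the forum
    passwd_file=$2    # password file, ideally outside the web root
    # ">>" appends, so an existing .htaccess is kept as it is.
    cat >> "$forum_dir/.htaccess" <<EOF
AuthType Basic
AuthName "Forum is being archived"
AuthUserFile $passwd_file
Require valid-user
EOF
}

# Example with made-up paths:
# write_htaccess /var/www/forum /etc/apache2/forum.htpasswd
# htpasswd -c /etc/apache2/forum.htpasswd username
```

The user name and password created with htpasswd are the ones wget later needs in order to get past the protection.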

As shown in the pictures below, you need to go to the administrative panel, choose "Spiders/Robots" and add your own. I chose "FabCopy".

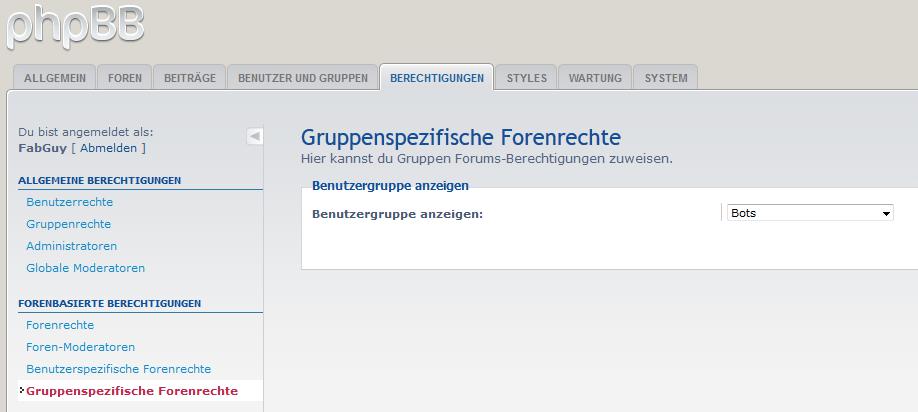

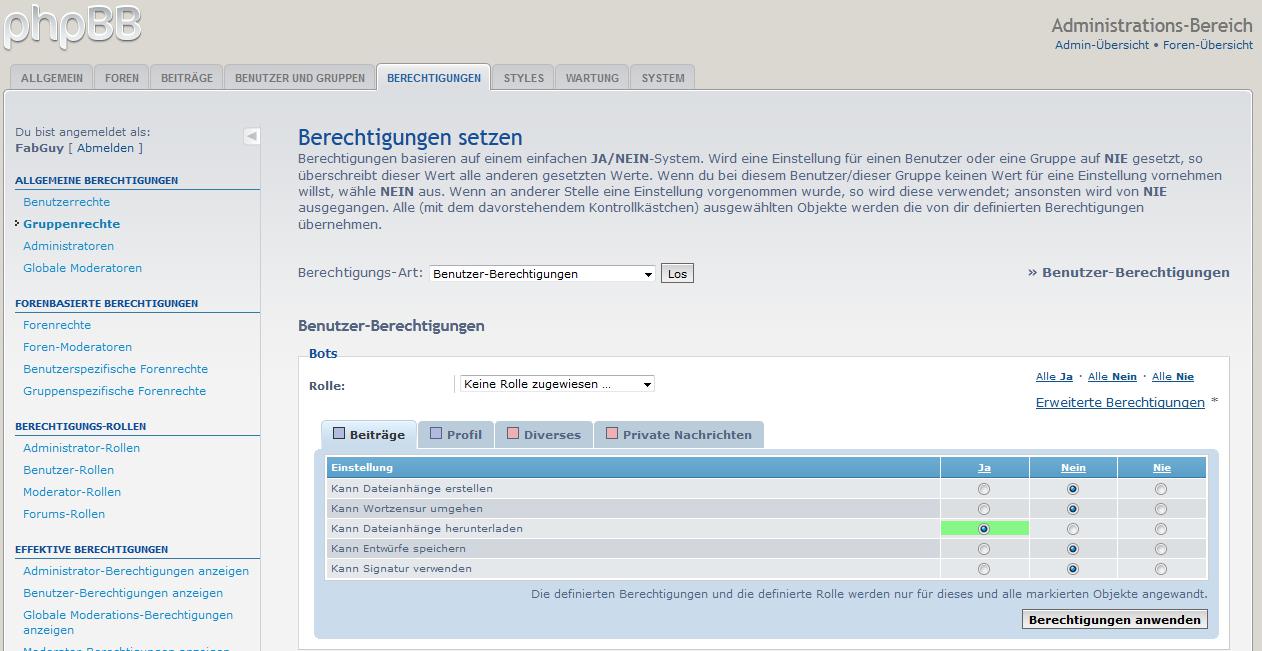

Also I chose to extend the access rights of the "Bots" group to allow access to all forums and the attachments of posts (embedded images and so on). You need to do the same both on the "group-specific forum rights" and the group rights in the first section "global permissions". For me "allow downloading attachments" was the main thing.
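Before starting the long mirror run it may be worth checking that phpBB3 really treats the new user agent as a bot, i.e. that the pages come back without a sid in the links. The following is a sketch: `has_sid` and `check_agent` are names I made up, and the check only looks for the text "sid=" followed by a hex digit in the returned HTML.

```shell
# Succeeds (exit status 0) if the HTML on stdin contains a sid parameter.
# (has_sid and check_agent are hypothetical helpers.)
has_sid() {
    grep -q 'sid=[0-9a-f]'
}

# Fetch one page with the given user agent and report whether links carry a sid.
# $1 = user agent string, $2 = URL of a forum page
# Note: if wget cannot fetch the page at all, this also prints "no session ID",
# so check that the URL is reachable first.
check_agent() {
    if wget -q -O - -U "$1" "$2" | has_sid; then
        echo "session ID present"
    else
        echo "no session ID"
    fi
}

# Example (add --user/--password if the forum is already protected):
# check_agent 'FabCopy' 'http://www.example.com/forum/index.php'
```

If this still reports a session ID, the user agent string passed to wget probably does not match the one entered under "Spiders/Robots".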

After some more tuning I got to this command to mirror the whole phpBB3 as HTML:

screen wget -U 'FabCopy' -k -m -E -p -np -R 'memberlist.php*mode=email*,faq.php*,viewtopic.php*p=*,posting.php*,search.php*,ucp.php*,viewonline.php*,*view=print*,*start=0*,mcp.php*,report.php*' --cookies=on --load-cookies=cookie.txt --keep-session-cookies --save-cookies=cookie.txt --user=username --password=**** -o log.txt 'http://www.example.com/forum/index.php'

So a bit of insight on this one:

- screen = As it may take some time, I've put the command in a screen. This will keep it going even if I lose the connection to my shell.

- -U 'FabCopy' = The user agent. It should match what you chose for your bot in phpBB3.

- -R = The reject list. I wanted to include the profile pages (memberlist.php) but not send e-mails to people - so I excluded the email mode. mcp.php (phpBB's moderator control panel) is used to send messages to people - I did not want that either.

- --user / --password = The credentials for the HTTP authentication set up via .htaccess.

- -o log.txt = As the screen will die once the command is finished I would not see any log messages. Sending the log to disk allows you to check on the progress easily and debug any errors.
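The short options not covered above are easier to read in their long form. The sketch below wraps the core of the command in a function; `mirror_forum`, `REJECT` and `DRY_RUN` are names I made up, and the cookie and --user/--password options are left out for brevity. On older wget versions the long name of -E is --html-extension instead of --adjust-extension.

```shell
# Reject list from the command above, quoted so the shell leaves the * alone.
REJECT='memberlist.php*mode=email*,faq.php*,viewtopic.php*p=*,posting.php*,search.php*,ucp.php*,viewonline.php*,*view=print*,*start=0*,mcp.php*,report.php*'

# Long-option equivalents of the short flags:
#   -U  --user-agent         -k  --convert-links (rewrite links for local viewing)
#   -m  --mirror             -E  --adjust-extension (save pages with .html suffix)
#   -p  --page-requisites    -np --no-parent (never climb above the start directory)
#   -R  --reject             -o  --output-file
# $1 = bot user agent, $2 = start URL.
# With DRY_RUN=1 the command is printed instead of executed.
mirror_forum() {
    ${DRY_RUN:+echo} wget \
        --user-agent="$1" \
        --convert-links \
        --mirror \
        --adjust-extension \
        --page-requisites \
        --no-parent \
        --reject="$REJECT" \
        --output-file=log.txt \
        "$2"
}

# Print the command without running it:
# DRY_RUN=1 mirror_forum 'FabCopy' 'http://www.example.com/forum/index.php'
```

While the real run is going, `tail -f log.txt` in a second shell shows the progress without touching the screen session.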

Hope this helps others facing a similar issue.